A Machine Learning Guide to HTM

The research coming out of Numenta, which combines biological and machine intelligence, is fascinating. The insights are both intriguing as well as important for future understanding of how humans interact with their environment.

Considering the extensive scope of the Numenta research, it may not be easy to understand, from a pure machine learning point of view, the concepts related to neuroscience and computer science. This is especially true with the important updates done throughout the years for general artificial intelligence theory and its algorithmic implementations.

Thus, this single-entry-point, easy-to-follow, and reasonably short guide to the HTM algorithm comes in handy for people who have never been exposed to Numenta research but have a basic machine learning background.

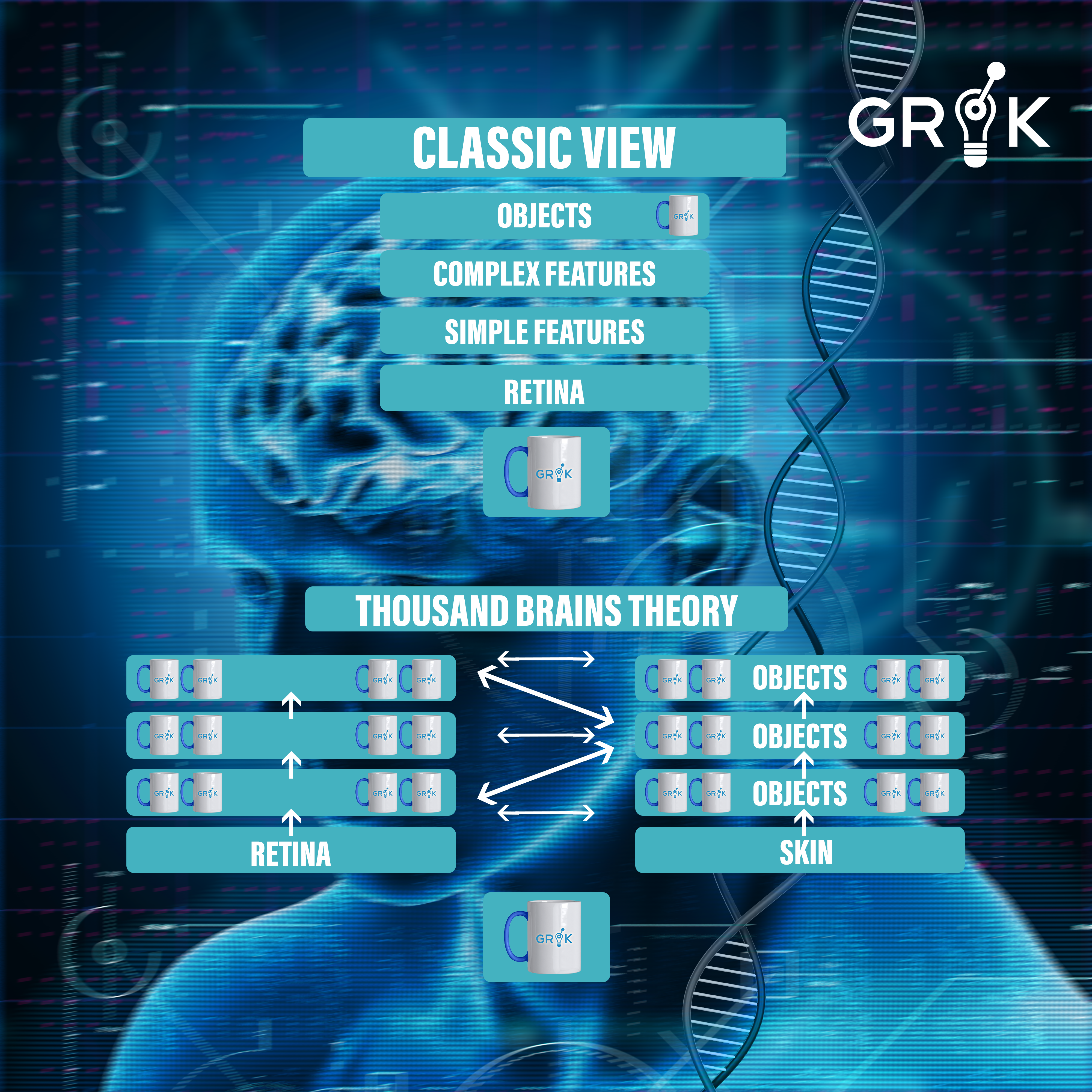

Before exploring in depth the HTM (Hierarchical Temporal Memory) algorithm details and its similarities with state-of-the-art machine learning algorithms, it is important to note the Thousand Brains Theory of Intelligence by Jeff Hawkins, a theory that encapsulates Numenta research for more than 15 years. The Thousand Brains Theory of Intelligence suggests that instead of learning one model of a concept (or an object), the brain creates many models of each subject. It is a biologically inspired theory of intelligence built through the reverse-engineering of the neocortex.

The algorithmic implementation and empirical validation in silicon of key components of the theory are the HTM, and these key components of which are as follows:

The Thousand Brains Theory of Intelligence

The Thousand Brains Theory is the culmination of almost two decades of neuroscience research at Numenta and the Redwood Neuroscience Institute (founded in 2002 by Jeff Hawkins). It is the core model-based, sensory-motor framework of intelligence that provides a distinct interpretation of the high-level thought processes that happen throughout the neocortex. As a result, it gives rise to intelligent behaviors.

Though extensively studied and proven by both anatomical evidence and functional neurological findings, this framework suggests a unique explanation for how the cortex represents object compositionality. This framework also applies to object behaviors and even to high-level concepts and suggests a basic functional mechanism that is tightly replicated across the cortical sheet – a cortical column.

The idea that a mechanism like the cortical column is present and functioning in the framework leads to the hypothesis that every cortical column learns complete models of objects. Unlike traditional hierarchical ideas in deep learning, where objects are learned only at the top, the theory proposes that there are many models of each object distributed throughout the neocortex (hence the name of the theory).

This framework may provide some essential insight into future machine learning developments. The temporal and location-based nature of its design, as well as how it disrupts traditional hierarchical integration with multi-modal data sources, are noteworthy features

(Fig 1). The Thousand Brains Theory of Intelligence suggests that there are long-range connections within the brain’s neocortex that allow models from different parts of the cortical environment to come together to produce a more or less accurate and useful perception of reality.

The HTM algorithm is derived from the well-defined and fundamental principles of Thousand Brains Theory. It focuses specifically on three areas which are

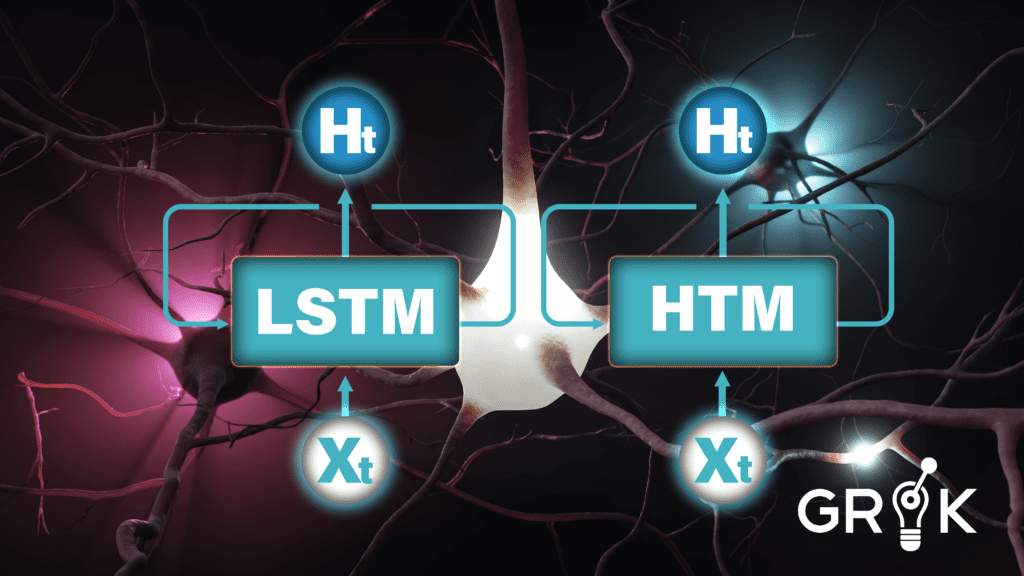

Although at a higher level and different in approach and learning rule, HTM shows promise for integrating with RNN (Recurrent Neural Networks). The higher level of knowledge that can be associated with Hierarchical Temporal Memory is what makes them applicable for sequence learning modeling. Known RNN incarnations such as LSTMs or GRUs have the ability to capture sequences.

Fig.2 The use of looping in Recurrent Neural Networks as Hierarchical Temporal Memories allows information to persist and is well-suited for attacking sequence learning problems.

RNNs distinguish themselves from HTMs as follows:

HTMs can be implemented in a powerful and highly efficient way. By contrast, however, in the case of strongly dimensional input patterns, HTMs may attempt to solve the credit-assignment problem if stacked in a multi-layer deployment as Multi-Layer LSTMs or Convolutional LSTMs.

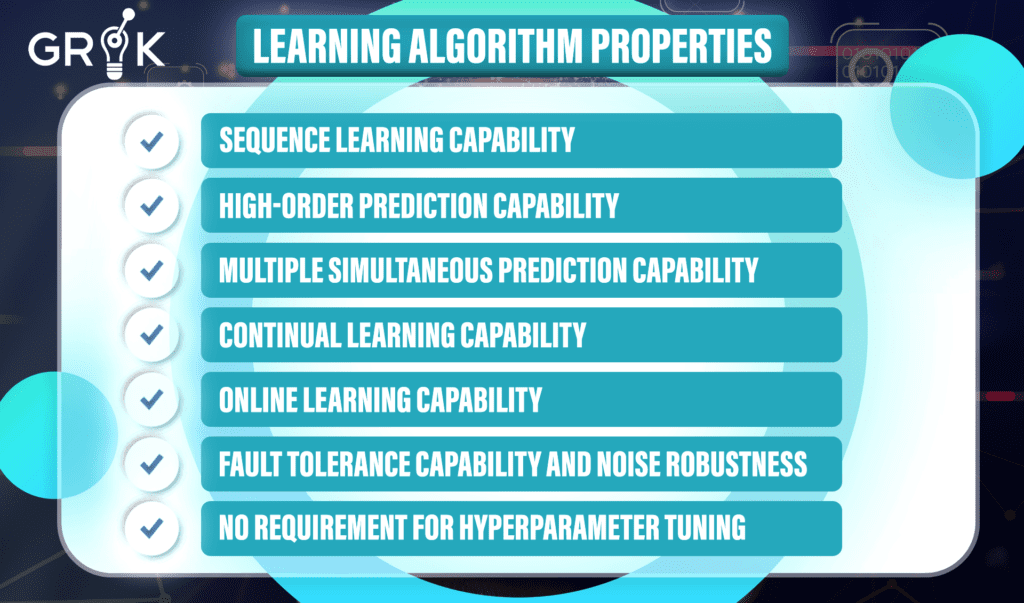

Despite this minor drawback, the Hierarchical Temporal Memory (HTM) algorithm supports by design several properties every learning algorithm should possess:

Sequence Learning Capability

Intelligence requires the ability to make predictions about what will happen next based on experience. This is an essential property of intelligence that both biological and artificial systems possess and answers the basic question: “What comes next?” Besides static spatial information, a machine learning algorithm should also be able to ground its prediction in time to provide a valuable forecast.

High-order Prediction Capability

High-order predictions are essential for real-world sequences because they contain contextual dependencies that span multiple time steps. The term “order” is the Markov order that is the minimum number of the previous state(s) the algorithm requires to arrive at precise predictions. Ideally, an algorithm should learn the order automatically and efficiently.

Multiple Simultaneous Prediction Capability

Many outcomes are possible for any given temporal context. When there is uncertainty in the data, a single best prediction can be difficult to determine. For an algorithm to make accurate decisions, it needs more than just one possible future event but rather must generate numerous predictions simultaneously and review the likelihood of each prediction online. The algorithm will output the distribution of possible future outcomes.

Continual Learning Capability

The data stream is constantly changing, so the algorithm needs to be able to learn from it and adapt quickly. Continual Learning is an important property that allows for efficient processing of continuous real-time streams but has not yet been thoroughly studied in machine learning mainly because it requires stored and processed data from previous encounters.

Online Learning Capability

It is much more useful if the operative algorithm can anticipate and assimilate new patterns as they present themselves without having to store complete sequences or group various series together as is necessary in the training of gradient-based recurrent neural networks. The ideal learning algorithm should be able to learn from one pattern at a time, which will make it more efficient and more nimble as the natural stream unfolds.

Fault Tolerance Capability and Noise Robustness

Real-world sequence learning processes noisy data sources where sensor noise, transmission errors, and device limitations often produce inaccuracies or gaps in information. A good algorithm should exhibit robust resistance to this type of distortion in results.

No requirement for hyperparameter tuning

Most machine-learning algorithms require optimizing hyperparameters. This process is usually guided by performance metrics on a cross-validation dataset. It involves searching through the hyperparameter space, typically a manually specified subset of it. For applications that need a high level of automation such as data stream mining, hyperparameter tuning proves to be challenging. Ideally, as in the case of HTM, the operative algorithm should demonstrate efficient performance on an extensive range of problems without any task-specific hyperparameter tuning.

The Hierarchical Temporal Memory (HTM) algorithm excels in Continual Learning Capability, Online Learning Capability, Fault Tolerance Capability and Noise robustness, and No hyperparameter tuning although these properties are extremely challenging to satisfy in a cutting-edge recurrent neural network.

With AI researchers already switching their perspective to look for new original approaches, Numenta has uniquely positioned itself to be at the forefront of the next wave of neuroscience-inspired AI research.

The HTM algorithm demonstrates how a neuroscience-grounded theory of intelligence may inform the development of future AI systems in order to develop more advanced behaviors.